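Deep learning has been applied very successfully in many areas, including image recognition, natural language processing, and speech recognition.

What distinguishes deep learning is its use of many hidden layers.

- In deep learning, a "hidden layer" is any layer that sits between the input layer and the output layer. The more hidden layers a model has, the more complex the patterns and relationships it can learn, which helps it model complex nonlinear relationships between inputs and outputs.

- A network with more hidden layers has more parameters, so it needs more data and more compute. Given enough of both, a deep network can be effective on very complex problems.

Building an ANN to predict whether a financial product is renewed (0 or 1)

Churn_Modelling.csv contains customer information along with whether each customer renewed the financial product. Use this data to build a deep learning model that predicts renewal.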

import pandas as pd

df = pd.read_csv('Churn_Modelling.csv')  # load the dataset described above

df

Cleaning up NaN values

df.isna().sum()

RowNumber 0

CustomerId 0

Surname 0

CreditScore 0

Geography 0

Gender 0

Age 0

Tenure 0

Balance 0

NumOfProducts 0

HasCrCard 0

IsActiveMember 0

EstimatedSalary 0

Exited 0

dtype: int64

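Splitting into feature columns and the target column

The feature columns (X) hold the explanatory variables for each observation.

The target column (y) holds the value to be predicted.

y = df['Exited']

X = df.loc[:, 'CreditScore':'EstimatedSalary'].copy()  # copy so later column assignments do not modify df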

X

Converting string columns to numbers

X['Geography'].nunique()  # 3 categories: use one-hot encoding

3

X['Gender'].nunique()  # 2 categories: use label encoding

2

from sklearn.preprocessing import OneHotEncoder, LabelEncoder

from sklearn.compose import ColumnTransformer

le = LabelEncoder()

X['Gender'] = le.fit_transform(X['Gender'])

sorted(X['Geography'].unique())

['France', 'Germany', 'Spain']

ct = ColumnTransformer(

[('encoder', OneHotEncoder(), [1])],

remainder='passthrough'

)

X = ct.fit_transform(X)
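
As a small illustration of where the encoded columns end up, the sketch below runs the same kind of ColumnTransformer on a three-row toy frame. The frame `toy`, the name `ct_demo`, and the `sparse_threshold=0` argument (used here only to force a dense array) are assumptions made for this demo and are not part of the original notebook.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

# Hypothetical toy data with the categorical column at index 1, as in X.
toy = pd.DataFrame({
    "CreditScore": [600, 700, 650],
    "Geography": ["Spain", "France", "Germany"],
    "Age": [40, 35, 50],
})

ct_demo = ColumnTransformer(
    [("encoder", OneHotEncoder(), [1])],
    remainder="passthrough",
    sparse_threshold=0,  # assumption for the demo: always return a dense array
)
out = ct_demo.fit_transform(toy)

# Categories are sorted, so the one-hot columns are France, Germany, Spain.
categories = list(ct_demo.named_transformers_["encoder"].categories_[0])
print(categories)

# The encoded columns come first; the passthrough columns follow.
print(out[0])  # first row is Spain, CreditScore 600, Age 40
```

Because the encoded columns are placed first and the categories are sorted, column 0 of the transformed array corresponds to France.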

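Dummy Variable Trap

X = pd.DataFrame(X).drop(0, axis=1).values

One of the one-hot columns is dropped because it is redundant: its value can always be derived from the other columns, and keeping it adds a perfectly collinear feature.

In the one-hot encoded result, the leftmost column can be dropped without losing any information.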

'France', 'Germany', 'Spain'

1 0 0

0 1 0

0 0 1

Even with the leftmost France column dropped,

0 0 => France

1 0 => Germany

0 1 => Spain

every country is still uniquely identified.
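
The redundancy can be checked directly. The following is a minimal sketch using a hand-written one-hot matrix (the array `one_hot` is illustrative, not taken from the dataset) showing that the dropped column can be rebuilt from the remaining two.

```python
import numpy as np

# One-hot rows for France, Germany, Spain (columns in alphabetical order).
one_hot = np.array([
    [1, 0, 0],  # France
    [0, 1, 0],  # Germany
    [0, 0, 1],  # Spain
])

# Drop the first (France) column, as the post does.
reduced = one_hot[:, 1:]

# Each original row sums to 1, so the dropped column is 1 minus the rest.
recovered_france = 1 - reduced.sum(axis=1)

print(reduced)
print(recovered_france)  # [1 0 0]
```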

Feature scaling

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X = sc.fit_transform(X)
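
StandardScaler rescales each column to zero mean and unit variance. The sketch below reproduces that arithmetic by hand with NumPy on a made-up column (`ages` is a hypothetical example, not data from the CSV).

```python
import numpy as np

# Hypothetical feature column.
ages = np.array([20.0, 30.0, 40.0, 50.0])

mean = ages.mean()
std = ages.std()  # population standard deviation (ddof=0), as StandardScaler uses

# Standardization: subtract the mean, divide by the standard deviation.
scaled = (ages - mean) / std

print(mean, std)
print(scaled)
print(scaled.mean(), scaled.std())  # approximately 0 and 1
```

In a stricter setup, the mean and standard deviation would be computed from the training rows only and then reused for the test rows, so that no test-set statistics influence training.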

Splitting into train and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0)

Modeling (ANN)

import tensorflow as tf

from tensorflow import keras

from keras.models import Sequential

from keras.layers import Dense  # Dense is a fully connected layer

model = Sequential()  # the Sequential API stacks layers one after another

model.add(Dense(units=8, activation='relu', input_shape=(11,)))

# First hidden layer. It takes the 11 input features and connects them to 8 neurons, with ReLU as the activation function.

model.add(Dense(6, 'relu'))

# Second hidden layer. It takes the 8 outputs of the previous layer, has 6 neurons, and also uses ReLU.

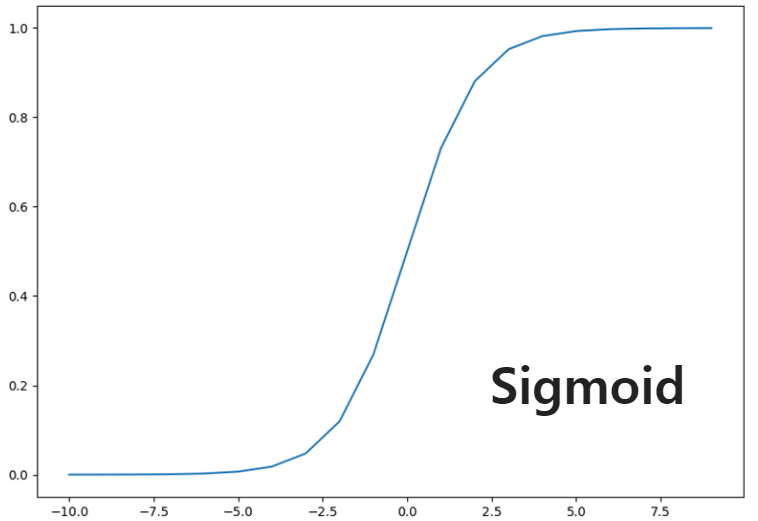

model.add( Dense(1, 'sigmoid') )

# Output layer. It has a single neuron with a sigmoid activation, the usual choice for binary classification because it maps the output to a probability between 0 and 1.
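
To make the role of the sigmoid concrete, here is a minimal standalone sketch of the function and of the 0.5 threshold applied later in the post. The helper name `sigmoid` and the sample inputs are illustrative only.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Large negative inputs approach 0, large positive inputs approach 1.
for z in (-4.0, 0.0, 4.0):
    p = sigmoid(z)
    label = int(p > 0.5)  # same threshold the post applies to predictions
    print(f"z={z:+.1f}  probability={p:.4f}  label={label}")
```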

model.summary()  # prints a summary of the model architecture

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 8) 96

_________________________________________________________________

dense_1 (Dense) (None, 6) 54

_________________________________________________________________

dense_2 (Dense) (None, 1) 7

=================================================================

Total params: 157

Trainable params: 157

Non-trainable params: 0

_________________________________________________________________

The summary shows each layer's configuration, output shape, and parameter count.

Param # is the number of trainable parameters in the layer, that is, its weights plus its biases.
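
The Param # column can be reproduced by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. The helper `dense_params` below is a hypothetical name used only for this sketch.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# Layer widths of the model above: 11 inputs -> 8 -> 6 -> 1.
layer_sizes = [11, 8, 6, 1]

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(counts)       # [96, 54, 7]
print(sum(counts))  # 157
```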

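Compile

Compiling the model sets three things, in this order:

1. Optimizer: the algorithm that updates the weights to reduce the loss (Adam in this post)

2. Loss function (also called the error or cost function)

- For a two-class problem, set the loss to 'binary_crossentropy'

- e.g. if it were set to mse, the model would be trained to minimize the mean squared error instead

3. Evaluation metrics reported during training and testing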
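
As a rough illustration of what the binary cross-entropy loss measures, the sketch below computes it by hand for two tiny sets of predictions. The function name, the clipping constant `eps`, and the sample values are assumptions for the demo; Keras has its own implementation.

```python
import math

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

labels = [1, 0]

# Confident and correct predictions give a small loss.
loss_right = binary_crossentropy(labels, [0.9, 0.1])

# Confident but wrong predictions give a large loss.
loss_wrong = binary_crossentropy(labels, [0.1, 0.9])

print(round(loss_right, 4), round(loss_wrong, 4))
```

The loss grows sharply when the model assigns a high probability to the wrong class, which is the behavior the optimizer is driven to avoid.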

model.compile(optimizer='adam',loss='binary_crossentropy',metrics=['accuracy'])

model.fit( X_train, y_train, batch_size = 10, epochs = 20)

In deep learning, one "epoch" is one complete pass of the entire training dataset through the model.

The epochs argument therefore sets how many times the model repeats training over the full training set; here it is 20.
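
The relationship between batch size, epochs, and weight updates can be worked out with simple arithmetic. The sketch below infers the training-set size from the numbers in this post (a 2,000-row test set, which is what the confusion matrix entries sum to, with test_size = 0.2); treat that inferred size as an assumption.

```python
import math

n_test = 1496 + 99 + 187 + 218   # test rows, from the confusion matrix
n_total = n_test * 5             # test_size=0.2 means the test set is one fifth
n_train = n_total - n_test       # remaining rows are used for training

batch_size = 10
epochs = 20

# One step = one weight update on one batch.
steps_per_epoch = math.ceil(n_train / batch_size)
total_updates = steps_per_epoch * epochs

print(n_train, steps_per_epoch, total_updates)  # 8000 800 16000
```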

Evaluating on the test set

model.evaluate(X_test, y_test)

63/63 [==============================] - 0s 2ms/step - loss: 0.3386 - accuracy: 0.8570

[0.3385504186153412, 0.8569999933242798]

# Checking the result with a confusion matrix

from sklearn.metrics import confusion_matrix, accuracy_score

y_pred = model.predict(X_test)

y_pred = (y_pred > 0.5)

cm = confusion_matrix(y_test, y_pred)

print(cm)

63/63 [==============================] - 0s 1ms/step

[[1496 99]

[ 187 218]]

(1496 + 218) / cm.sum()  # accuracy

0.857

accuracy_score(y_test, y_pred)  # accuracy

0.857
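
Accuracy alone hides how the errors are split between the two classes. As a supplementary sketch, the confusion matrix printed above can also be turned into precision and recall for class 1; the variable names are illustrative.

```python
import numpy as np

# Confusion matrix from the post: rows are true labels, columns are predictions.
cm = np.array([[1496, 99],
               [187, 218]])

tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()
precision = tp / (tp + fp)  # of the predicted 1s, how many were really 1
recall = tp / (tp + fn)     # of the real 1s, how many were found

print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
```

Here recall is noticeably lower than accuracy, since 187 of the 405 true class-1 customers were predicted as 0.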

Predicting for newly received data

new_data2 = [{'CreditScore': 600,'Geography':'France','Gender':'Male', 'Age':40, 'Tenure':3, 'Balance':60000, 'NumOfProducts':2,'HasCrCard': 1, 'IsActiveMember':1, 'EstimatedSalary':50000}]

# This list of key-value dicts mirrors the structure of JSON data, although here it is a plain Python object

# JSON (JavaScript Object Notation) is a lightweight format for storing and transmitting data.

# In JSON, objects are written with curly braces ({}) and arrays with square brackets ([])

new_data = pd.DataFrame(new_data2)

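# Label-encode Gender with the already fitted encoder

new_data['Gender'] = le.transform(new_data['Gender'])

# One-hot encode Geography with the already fitted transformer

new_data = ct.transform(new_data)

# Dummy variable: drop the first one-hot column

new_data = pd.DataFrame(new_data).drop(0, axis=1).values

# Feature scaling with the already fitted scaler

new_data = sc.transform(new_data)

# Predict on the preprocessed array (not on the raw new_data2 list)

y_pred = model.predict(new_data)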

(y_pred > 0.5).astype(int)

array([[0]])

Result: the predicted class is 0, so the model expects this new customer not to renew.
