Fashion-MNIST | TensorFlow Datasets: https://www.tensorflow.org/datasets/catalog/fashion_mnist?hl=ko

import numpy as np

import tensorflow as tf

from tensorflow.keras.datasets import fashion_mnist  # use the Fashion-MNIST dataset

(X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()

Looking at the images

X_train.shape

(60000, 28, 28): 60,000 images, each with 28 rows and 28 columns.

<Getting the first image>

X_train[0, :, :]  # the array is 3-D, so the index needs two commas

ndarray (28, 28)

y_train[0]

9

- The label of the first image. In Fashion-MNIST, label 9 is Ankle boot.

<Getting the second image>

X_train[1, :, :]

ndarray (28, 28)

y_train[1]

0

- The label of the second image. In Fashion-MNIST, label 0 is T-shirt/top.
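To turn numeric labels into readable names, a lookup list in the label order documented for Fashion-MNIST is enough. The names `CLASS_NAMES` and `label_name` are illustrative helpers, not part of the original post.

```python
# Label index -> class name for Fashion-MNIST (order as documented for the dataset)
CLASS_NAMES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]


def label_name(label):
    """Return the class name for an integer label 0-9 (works for NumPy ints too)."""
    return CLASS_NAMES[int(label)]


print(label_name(9))  # Ankle boot  (label of X_train[0])
print(label_name(0))  # T-shirt/top (label of X_train[1])
```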

Feature scaling

Pixel values range from 0 to 255, so feature scaling is simply a matter of dividing by 255.

After scaling, every value lies between 0 and 1.

X_train = X_train / 255.0  # in Python 3, / is always true division, so /255 would also give floats; 255.0 just makes the intent explicit

X_test = X_test / 255.0
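A quick check of the scaling step on a tiny hypothetical uint8 array (not real Fashion-MNIST data): with NumPy under Python 3, `/` is true division, so dividing by `255` or by `255.0` gives the same float64 values in the range [0, 1].

```python
import numpy as np

# Tiny made-up "image" with uint8 pixels, the same dtype as the raw dataset
pixels = np.array([[0, 64], [128, 255]], dtype=np.uint8)

scaled_int = pixels / 255      # true division: the result is float64, not int
scaled_float = pixels / 255.0  # same values, same dtype

print(scaled_int.dtype)                           # float64
print(np.array_equal(scaled_int, scaled_float))   # True
print(scaled_int.min(), scaled_int.max())         # 0.0 1.0
```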

Reshaping the dataset

X_train.shape

(60000, 28, 28): 60,000 images, each with 28 rows and 28 columns.

Why does the data need to be reshaped?

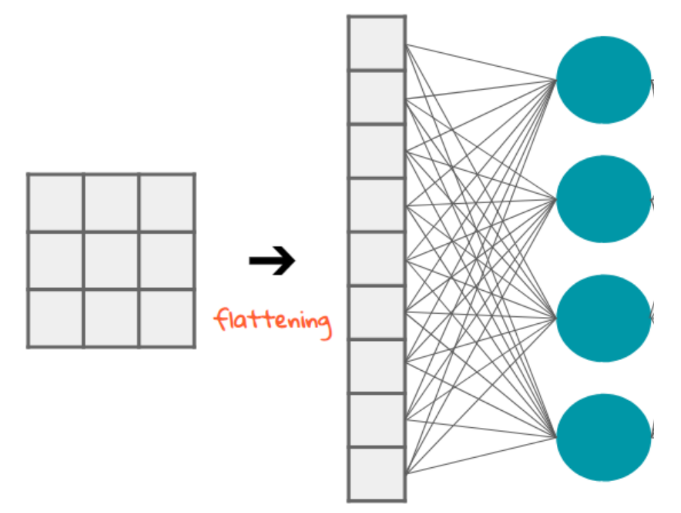

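Deep learning proceeds through the steps illustrated in the figure. Each sample in X_train is an image, so it has a square, two-dimensional shape.

The network learns from these pixels (after scaling, black is 0 and white is 1) and gradually adjusts itself toward the correct answers.

Because the Dense (fully connected) layers used here take a one-dimensional vector as input, each 28×28 image must first be flattened into a single row of 784 values. In the model below, the Flatten layer takes care of this.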
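What flattening does to the data can be sketched with NumPy's `reshape` on a hypothetical batch shaped like `X_train`. This assumes row-major (C) order, NumPy's default; in the model itself the Keras `Flatten` layer does the equivalent per image, so no manual reshape is needed there.

```python
import numpy as np

# Hypothetical batch of 5 "images" of 28x28 pixels; arange makes every pixel unique
images = np.arange(5 * 28 * 28).reshape(5, 28, 28)

flat = images.reshape(len(images), -1)  # -1 lets NumPy infer 28 * 28 = 784
print(flat.shape)  # (5, 784)

# Row-major order: pixel (row r, column c) ends up at position r * 28 + c
r, c = 2, 3
print(flat[0, r * 28 + c] == images[0, r, c])  # True
```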

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

def build_model():

model = Sequential()

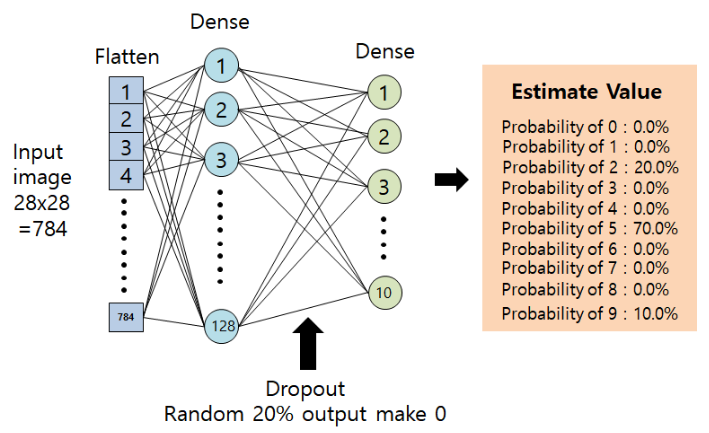

model.add( Flatten() ) # 입력 레이어 Flatten으로, 784개로 설정된다 (28열*28행)

model.add( Dense(128, 'relu') ) # 히든 레이어는 128개로 설정하였다. 설정하는 사람 마음대로 설정하면 된다

model.add( Dense(10, 'softmax')) # 레이블이 10개(0~9까지)이므로, 출력레이어는 10개로 설정한다

model.compile('adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

return model

# 3개 이상의 분류문제는 loss function을 sparse_categorical_crossentropy로 한다

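For classification, the outputs should be probabilities that add up to 1. The 'softmax' activation function does exactly this: it converts the 10 output values into probabilities whose sum is 1.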
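A minimal NumPy sketch of softmax, using made-up raw outputs (logits) for a single image. Subtracting the maximum before exponentiating is a standard numerical-stability trick and does not change the result.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - np.max(logits, axis=-1, keepdims=True)  # shift so the largest value is 0
    exp = np.exp(z)
    return exp / np.sum(exp, axis=-1, keepdims=True)


# Hypothetical raw outputs of a 10-unit output layer for one image
logits = np.array([1.0, 0.5, -1.0, 0.0, 2.0, -0.5, 0.3, 3.0, 0.1, -2.0])
probs = softmax(logits)

print(probs.sum())        # 1.0, up to floating-point rounding
print(np.argmax(probs))   # 7: softmax preserves order, so the largest logit still wins
```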


from tensorflow.keras.callbacks import EarlyStopping

early_stopping = EarlyStopping(patience=10)

model = build_model()

epoch_history = model.fit(X_train, y_train, epochs=1000, validation_split=0.2, callbacks=[early_stopping])

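Running this prints loss, accuracy, val_loss and val_accuracy for every epoch. Although epochs is set to 1000, EarlyStopping(patience=10) monitors val_loss by default and stops training once it has not improved for 10 consecutive epochs.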

For more on early stopping, see the following post:

https://codebunny99.tistory.com/31
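Patience-based early stopping, as configured with `EarlyStopping(patience=10)`, can be illustrated with a simplified pure-Python sketch. The function `early_stop_epoch` and the loss curve are hypothetical; this is not Keras's source code, and the real callback has more options (for example `min_delta` and `restore_best_weights`).

```python
def early_stop_epoch(val_losses, patience=10, min_delta=0.0):
    """Return the 0-based epoch at which training would stop, or None if it never stops.

    Simplified sketch of patience-based early stopping (not the Keras implementation).
    """
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:  # improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:     # no improvement for `patience` epochs in a row
                return epoch
    return None


# Hypothetical val_loss curve: improves for 3 epochs, then plateaus
curve = [1.0, 0.8, 0.7] + [0.75] * 10
print(early_stop_epoch(curve, patience=10))  # 12
```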

For finding the optimal hyperparameters with GridSearch, see the post "Finding the optimal hyperparameters with GridSearch" on codebunny99.tistory.com.

Evaluating on the test data

model.evaluate(X_test, y_test)

[==============================] - 1s 2ms/step - loss: 0.3772 - accuracy: 0.8818

[0.3771723508834839, 0.8817999958992004]

# Plot loss and val_loss (matplotlib was not imported earlier)

import matplotlib.pyplot as plt

plt.plot(epoch_history.history['loss'])

plt.plot(epoch_history.history['val_loss'])

plt.legend(['loss','val_loss'])

plt.show()

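- loss, the error on the training data, keeps decreasing as the model learns that data epoch after epoch, while val_loss, the error on the held-out validation split (20% of the training data here, standing in for future unseen data), goes up as well as down.

Even so, the overall trend of the loss is downward.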

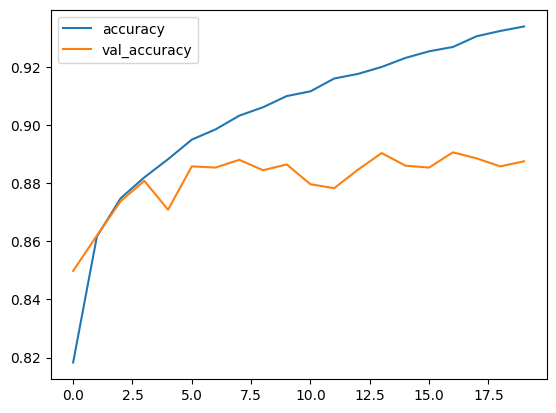
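To read the loss curves numerically rather than by eye, one can find the epoch with the lowest val_loss and look at the gap between validation and training loss. The `history` dictionary below uses invented numbers for illustration only (they are not this post's results), but it has the same structure as `epoch_history.history`.

```python
import numpy as np

# Hypothetical values with the same keys as epoch_history.history
history = {
    "loss":     [0.50, 0.37, 0.33, 0.31, 0.29, 0.27],
    "val_loss": [0.40, 0.36, 0.34, 0.35, 0.33, 0.36],
}

best_epoch = int(np.argmin(history["val_loss"]))      # 0-based epoch with the lowest val_loss
gap = history["val_loss"][-1] - history["loss"][-1]   # a widening gap hints at overfitting

print(best_epoch + 1, history["val_loss"][best_epoch])  # 5 0.33
print(round(gap, 2))                                    # 0.09
```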

# Plot accuracy and val_accuracy

plt.plot(epoch_history.history['accuracy'])

plt.plot(epoch_history.history['val_accuracy'])

plt.legend(['accuracy','val_accuracy'])

plt.show()

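- Accuracy on the training data rises as the model keeps learning it. Accuracy on the validation data is measured on samples the model has not trained on, so it goes up and down from epoch to epoch, but overall it also tends to rise.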

Confusion matrix

import seaborn as sb

from sklearn.metrics import confusion_matrix

y_pred = np.argmax(model.predict(X_test), axis=1)

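# argmax returns the index of the largest element in the given array (here along axis=1, i.e. per image).

# argmax is needed because, among the 10 output probabilities, the index of the highest one is the predicted label number, which must be compared with the true label in y_test.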
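A small sketch of the argmax step, using a hypothetical probability matrix with 3 classes instead of 10 to keep it short.

```python
import numpy as np

# Hypothetical predict() output: one row per image, one column per class
probs = np.array([
    [0.1, 0.2, 0.7],
    [0.8, 0.1, 0.1],
    [0.3, 0.6, 0.1],
])
y_true = np.array([2, 0, 2])

y_pred = np.argmax(probs, axis=1)   # index of the largest probability in each row
print(y_pred)                       # [2 0 1]
print((y_pred == y_true).mean())    # 2 of 3 predictions are correct
```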

cm = confusion_matrix(y_test, y_pred)

cm

[==============================] - 1s 3ms/step

array([[783, 3, 26, 27, 3, 0, 150, 0, 8, 0],

[ 1, 972, 1, 19, 5, 0, 2, 0, 0, 0],

[ 12, 1, 810, 12, 124, 0, 41, 0, 0, 0],

[ 15, 5, 10, 904, 29, 0, 31, 0, 5, 1],

[ 0, 0, 94, 33, 845, 0, 26, 0, 2, 0],

[ 0, 0, 0, 1, 0, 946, 0, 23, 3, 27],

[ 75, 0, 113, 31, 100, 0, 674, 0, 7, 0],

[ 0, 0, 0, 0, 0, 11, 0, 974, 1, 14],

[ 5, 0, 10, 4, 5, 2, 11, 4, 959, 0],

[ 0, 0, 0, 0, 0, 5, 1, 43, 0, 951]])

np.diagonal(cm).sum() / cm.sum()  # accuracy = sum of the diagonal (correct predictions) / sum of all entries

0.8818
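The confusion matrix and the accuracy formula can be reproduced with plain NumPy. The helper `confusion_matrix_np` is a hypothetical name; like `sklearn.metrics.confusion_matrix`, it puts true labels on the rows and predicted labels on the columns. The second half reuses the matrix printed above to compute per-class recall and the most frequent mistake.

```python
import numpy as np


def confusion_matrix_np(y_true, y_pred, num_classes):
    """Rows = true label, columns = predicted label (same layout as sklearn)."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # add 1 to cm[t, p] for every sample
    return cm


# Small made-up example: 3 classes, 5 samples
small_cm = confusion_matrix_np(np.array([0, 1, 2, 2, 1]), np.array([0, 2, 2, 2, 1]), 3)
print(small_cm.tolist())  # [[1, 0, 0], [0, 1, 1], [0, 0, 2]]

# The Fashion-MNIST test-set confusion matrix printed above
cm = np.array([
    [783, 3, 26, 27, 3, 0, 150, 0, 8, 0],
    [1, 972, 1, 19, 5, 0, 2, 0, 0, 0],
    [12, 1, 810, 12, 124, 0, 41, 0, 0, 0],
    [15, 5, 10, 904, 29, 0, 31, 0, 5, 1],
    [0, 0, 94, 33, 845, 0, 26, 0, 2, 0],
    [0, 0, 0, 1, 0, 946, 0, 23, 3, 27],
    [75, 0, 113, 31, 100, 0, 674, 0, 7, 0],
    [0, 0, 0, 0, 0, 11, 0, 974, 1, 14],
    [5, 0, 10, 4, 5, 2, 11, 4, 959, 0],
    [0, 0, 0, 0, 0, 5, 1, 43, 0, 951],
])

accuracy = np.trace(cm) / cm.sum()      # diagonal sum / total
recall = np.diag(cm) / cm.sum(axis=1)   # per-class accuracy (each row is one true class)
weakest = int(np.argmin(recall))        # class with the lowest recall

off = cm.copy()
np.fill_diagonal(off, 0)                # ignore correct predictions
true_lbl, pred_lbl = np.unravel_index(off.argmax(), off.shape)

print(accuracy)                         # 0.8818
print(weakest, recall[weakest])         # 6 0.674
print(int(true_lbl), int(pred_lbl), off.max())  # 0 6 150
```

With these numbers, class 6 (Shirt) has the lowest recall, 674 out of 1,000 test images, and the most frequent single mistake is 150 T-shirt/top images predicted as Shirt.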
